Date : February 28, 2025

Time : 8:30 to 12:00 (MST)

Location : AZ Ballroom Salon 3

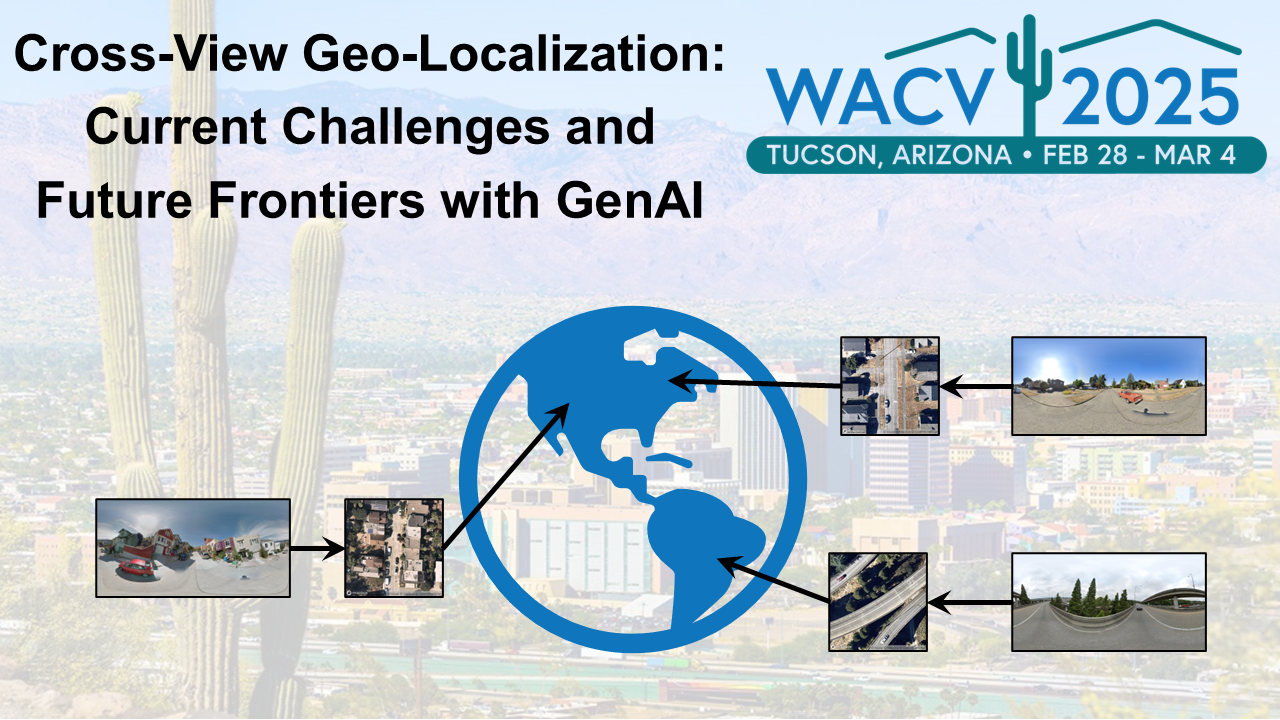

Tutorial Description

The availability of geospatial data from diverse sources and perspectives has expanded rapidly in recent years, unlocking opportunities across various fields. Cross-view geo-localization—matching ground images with aerial or satellite views to pinpoint geographical locations—has become a critical area of research due to its diverse applications in autonomous navigation, urban planning, and augmented reality. Despite substantial advancements, this field faces significant challenges, including handling extreme viewpoint variations, reliability, and integration of multimodal information across diverse perspectives. Recent developments in Generative AI (GenAI), including Large Vision-Language Models (LVLMs), have introduced more generalized approaches to incorporate multimodality data. These advancements not only enhance performance but also redefine how multimodality is utilized in real-world settings. As the field evolves rapidly, this tutorial offers an ideal opportunity for researchers—especially those new to the domain—to grasp the latest methodologies, explore cutting-edge datasets, and understand emerging trends that will shape the future of cross-view geo-localization.

Organizers

- Chen Chen, University of Central Florida, Orlando, FL, USA

- Safwan Wshah, University of Vermont, Burlington, VT, USA

- Xiaohan Zhang, University of Vermont, Burlington, VT, USA

Speakers

- Rakesh “Teddy” Kumar, SRI International, Menlo Park, CA, USA

- Zhedong Zheng, University of Macau, Macau, China

Schedule

-

8:30 - 8:40: Welcome

-

8:40 - 9:20: Multimedia UAVs: Capturing the World from a New Perspective

Speaker: Zhedong Zheng

-

9:20 - 10:00: GenAI for cross-view geo-localization

Speaker: Safwan Wshah

-

10:00 - 10:20: Coffee Break

-

10:20 - 11:00: Geo-localization of ground and aerial imagery by cross-view alignment to overhead reference

Speaker: Rakesh “Teddy” Kumar

-

11:00 - 11:40: Large Vision-Language models for cross-view geo-localization

Speaker: Chen Chen

-

11:40 - 12:00: Panel Discussion

Covered Publications

- X. Zhang, X. Li, W. Sultani, Y. Zhou, and S. Wshah, “Cross-View Geo-Localization via Learning Disentangled Geometric Layout Correspondence,” AAAI, vol. 37, no. 3, pp. 3480–3488, Jun. 2023, doi: 10.1609/aaai.v37i3.25457.

- X. Zhang, W. Sultani, and S. Wshah, “Cross-View Image Sequence Geo-Localization,” presented at the Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2023, pp. 2914–2923.

- A. Arrabi, X. Zhang, W. Sultani, C. Chen, and S. Wshah, “Cross-View Meets Diffusion: Aerial Image Synthesis with Geometry and Text Guidance,” Aug. 20, 2024, arXiv: arXiv:2408.04224. doi: 10.48550/arXiv.2408.04224.

- N. C. Mithun, K. S. Minhas, H.-P. Chiu, T. Oskiper, M. Sizintsev, S. Samarasekera, and R. Kumar, “Cross-View Visual Geo-Localization for Outdoor Augmented Reality,” in 2023 IEEE Conference Virtual Reality and 3D User Interfaces (VR), Mar. 2023, pp. 493–502. doi: 10.1109/VR55154.2023.00064.

- X. Zhang, X. Li, W. Sultani, C. Chen, and S. Wshah, “GeoDTR+: Toward Generic Cross-View Geolocalization via Geometric Disentanglement,” IEEE Transactions on Pattern Analysis and Machine Intelligence, pp. 1–19, 2024, doi: 10.1109/TPAMI.2024.3443652.

- D. Wilson, X. Zhang, W. Sultani, and S. Wshah, “Image and Object Geo-Localization,” Int J Comput Vis, vol. 132, no. 4, pp. 1350–1392, Apr. 2024, doi: 10.1007/s11263-023-01942-3.

- M. Chu, Z. Zheng, W. Ji, T. Wang, and T.-S. Chua, “Towards Natural Language-Guided Drones: GeoText-1652 Benchmark with Spatial Relation Matching”.

- Z. Zheng, Y. Wei, and Y. Yang, “University-1652: A Multi-view Multi-source Benchmark for Drone-based Geo-localization,” in Proceedings of the 28th ACM International Conference on Multimedia, in MM ’20. New York, NY, USA: Association for Computing Machinery, Oct. 2020, pp. 1395–1403. doi: 10.1145/3394171.3413896.

Content: Xiaohan Zhang 2024.

Theme: workshop-template-b by evanwill is built using Jekyll on GitHub Pages. The site is styled using Bootstrap.